Voters Are Concerned About ChatGPT and Support More Regulation of AI

By Suhan Kacholia

In November 2022, OpenAI released ChatGPT, a versatile large language model (LLM) that can write code, draft convincing text, and more. ChatGPT has helped people save time and unlock new possibilities in everything from academic research to game creation. However, some have raised concerns about ChatGPT displacing jobs, propagating misinformation, and disrupting education, healthcare, and journalism, among other sectors.

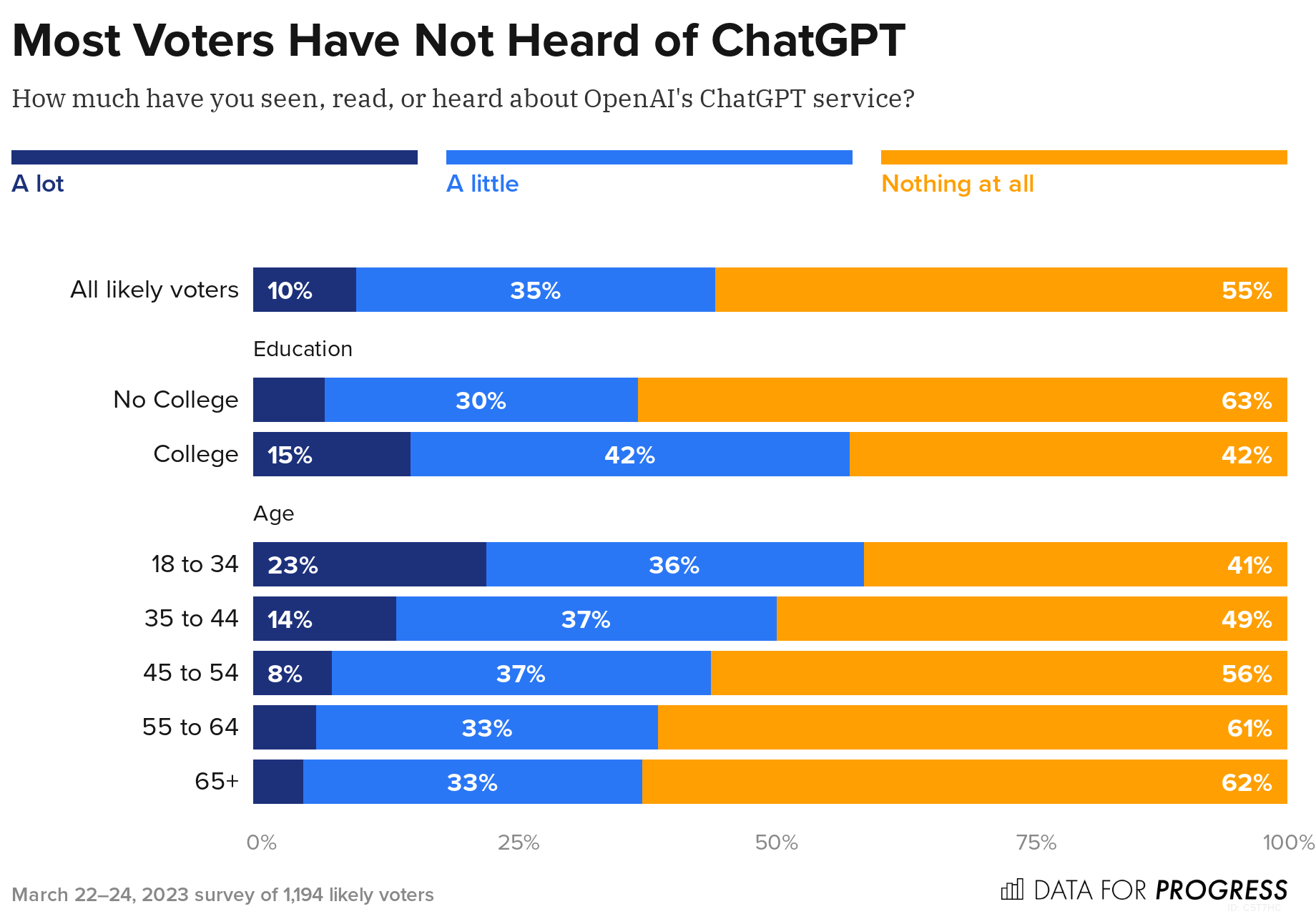

Despite significant media coverage of artificial intelligence (AI) and large language models, March polling by Data for Progress reveals that most voters (55 percent) have heard “nothing at all” about ChatGPT, with only 10 percent stating they have heard “a lot.”

However, awareness varies by education status and age. A majority of college-educated voters (57 percent) have heard at least “a little” about ChatGPT, while 63 percent of non-college-educated voters have heard nothing at all. A majority (62 percent) of voters above 65 have heard nothing at all, while a majority of voters between 18 and 34 (59 percent) and between 35 and 44 (51 percent) have heard at least a little.

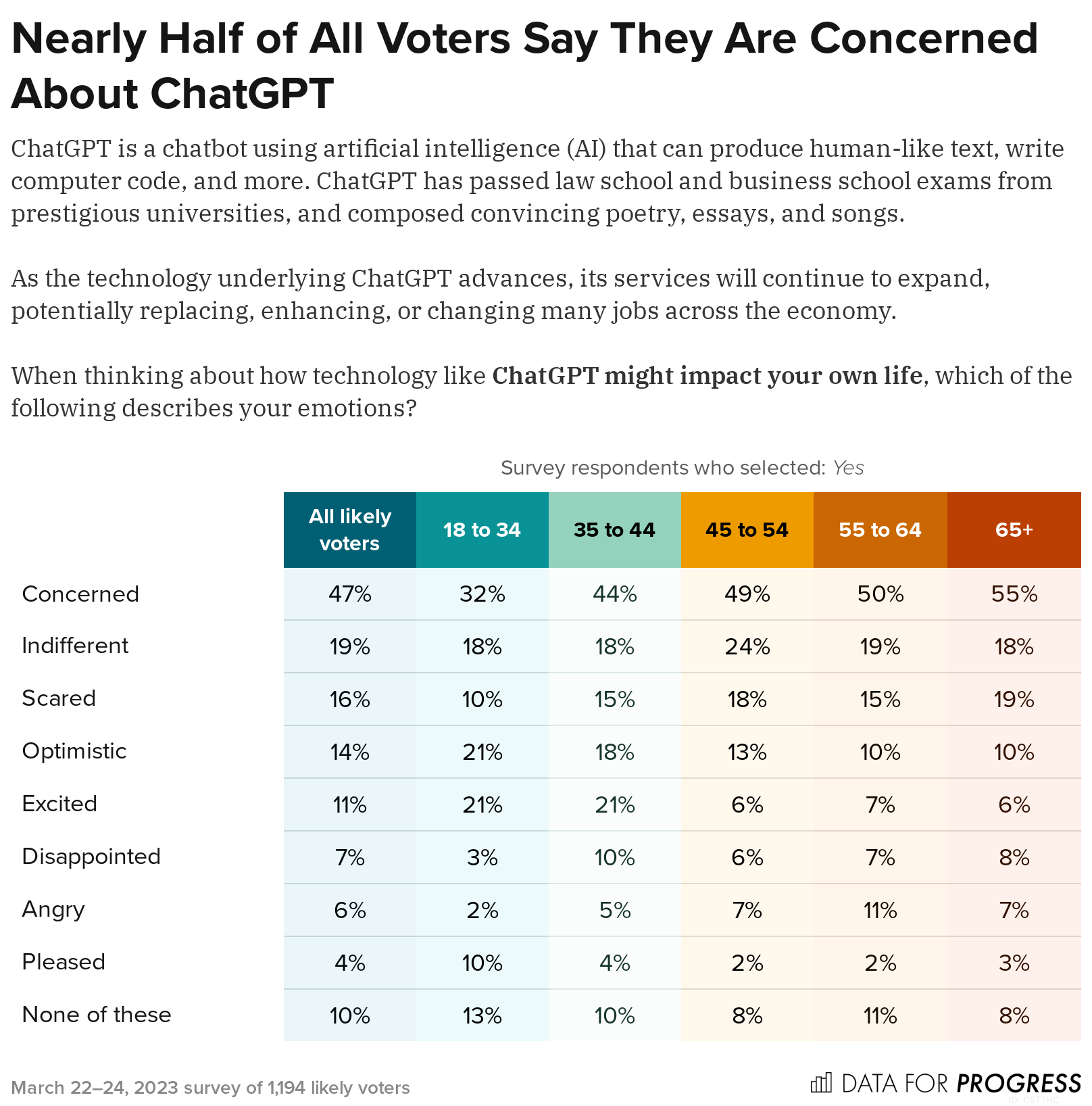

After seeing context about the features of ChatGPT and its potential consequences for different industries, a plurality of voters (47 percent) report they are “concerned” about how it might impact their lives. Nineteen percent say they are “indifferent,” while 16 percent say they are “scared.”

However, younger voters are more enthusiastic about ChatGPT. While a majority of voters (55 percent) above 65 are concerned about the tool, only 32 percent of voters between 18 and 34 are. Those younger voters are also more likely to state they are “excited” (21 percent) or “optimistic” (21 percent) about the technology.

AI capabilities are advancing rapidly. Just two months ago, OpenAI released GPT-4, an even more capable successor to ChatGPT. At the same time, AI remains poorly understood, with the inner workings of models often being an uninterpretable “black box” to humans.

In light of the risks posed by AI, many experts and commentators have suggested slowing down AI development to ensure there is time to understand and appropriately regulate the technology.

A majority of respondents (56 percent) agree, stating they prefer that the U.S. slow down AI progress, even if that entails sacrificing short-term growth and international competitiveness. This includes 58 percent of Republicans, 57 percent of Independents, and 52 percent of Democrats.

In a January New York Times op-ed, Representative Ted Lieu proposed creating a dedicated federal agency to regulate AI. Such an agency would be staffed by artificial intelligence experts and be capable of responding swiftly to AI developments by setting standards for both the creation and use of AI systems.

By a +35-point margin, voters across party lines support establishing such an agency. Democrats support the proposal by an overwhelming +63-point margin, while Republicans back it by a margin of +18 points.

When OpenAI released GPT-4, the company did not disclose information about the text or image data used to train it. Data influences the behavior of AI models. For instance, if a model is only trained on academic texts, it may create texts with a scholarly tone. Feeding LLMs toxic, inaccurate, or conspiratorial text could thus lead to disturbing and biased behavior.

Large language models like GPT-4 are important technologies with significant risks. GPT-4’s own system card showed that it is capable of lying to humans to get them to solve a CAPTCHA and of ordering research chemicals online. If prompted using a “jailbreak,” it can produce hateful vitriol.

Requiring AI companies to release the data used to train influential models could help mitigate the risks and biases of their technologies. An overwhelming majority (79 percent) of voters support such a requirement, including 83 percent of Democrats, 78 percent of Independents, and 76 percent of Republicans.

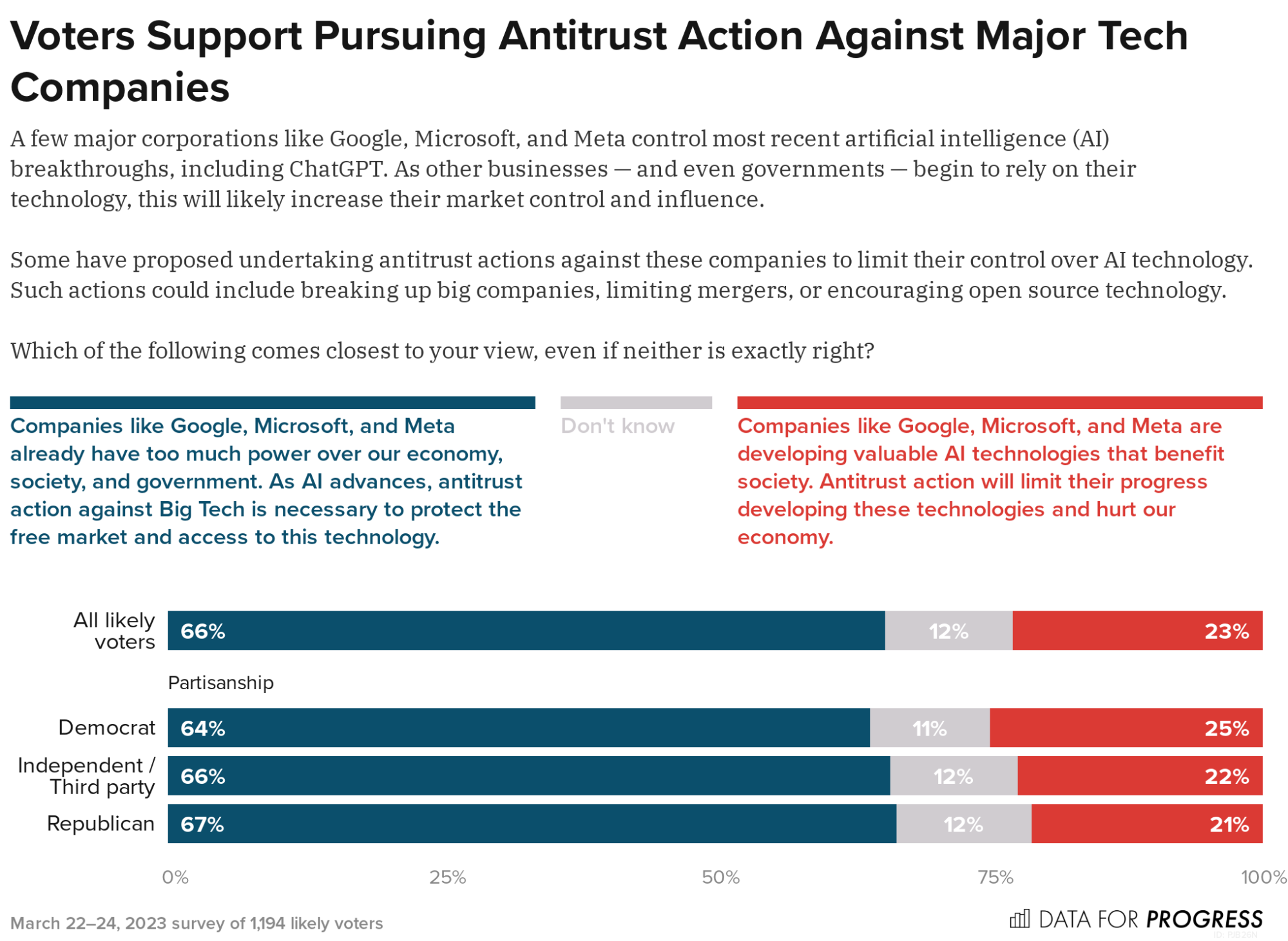

Many prominent AI applications come from a few companies, including Google and Microsoft (which holds a major stake in OpenAI). AI models like GPT might become the backbone for various apps and tools — especially as OpenAI rolls out “plugins” that connect GPT to external services. This could substantially increase the influence of already powerful large tech companies.

When presented with information about the ownership of AI models like ChatGPT, roughly two-thirds of voters (66 percent) support taking antitrust action against major tech companies, with near-identical support across party lines: 64 percent of Democrats, 66 percent of Independents, and 67 percent of Republicans.

After learning about what ChatGPT does, voters are concerned about its impact on their lives and prefer a slow, cautious approach to deploying the technology. Voters across party lines support significant regulations on AI, including the establishment of a dedicated federal agency, mandated data transparency practices, and antitrust enforcement against large AI companies. Policymakers should take voter concerns into account when regulating AI, ensuring its benefits are distributed broadly and the technology develops in a safe, responsible manner.

Suhan Kacholia (@SuhanKacholia1) is a polling intern at Data for Progress.